Introduction

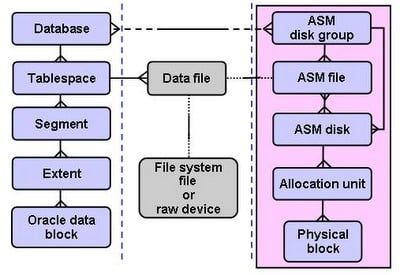

Block corruption is a common topic when we are dealing with any software that stores data. In Oracle Database there are several types of logical structures that are mapped to a physical file named “datafile” that is divided into filesystem blocks.

A block can have logical or physical corruption. A corrupt block is a block that has been changed so that it differs from what Oracle expects to find. A logical corruption is a block that has a valid checksum but its content is corrupt; for example, a row locked by a non-existent transaction, the amount of space used is not equal to block size, avsp bad, etc. Logical corruption can cause ORA-600 depending on which content inside the block is corrupted. A physical corruption is also called a media corruption; the database does not recognize the block at all, it’s when the problem is not related to the content but to the physical location or structure itself; for example, a bad header, a fractured/incomplete block, the block checksum is invalid, the block is misplaced, zeroed-out blocks, the header and footer of the block do not match, one of the key data block data structures is incorrect, such as the data block address (DBA), etc.

Detecting, monitoring and fixing corrupt blocks is an important task that we have take care of regularly and frequently. A corrupt block not only means a problem with the block but also means that there is data that may be lost, and this is very important for the business.

The Problem

The problem with corrupted blocks is that we don’t know they are corrupted until we try to use them. Of course, this applies to a scenario where we are not executing a proactive activity to detect corrupt blocks. For example, a table block can be corrupted and there is no way to know it until someone performs a SELECT or any other DML that reads that block. Once the block is read, Oracle will know the block is corrupted and then an ORA-0600, ORA-27047 or ORA-01578 will be returned to the user.

A long time ago a customer called me saying that they were trying to execute a SELECT from the application and whenever the SELECT was executed the application got an ORA-01578. I detected the block # and the datafile # and I fixed it. At that time, the user was able to continue working the rest of the day. However, the next day again, the same customer called me saying that they were receiving more ORA-01578’s. This time I confirmed that the corrupted block was in a different datafile than the block I’d fixed a day before. This made me think that there could be more corrupted blocks. I executed dbverify against the full database and I saw that the database had several corrupted blocks. However last rman backup didn’t report any corrupted blocks. We engaged a sysadmin and he detected that the storage was having issues that day. Fortunately we detected the storage problem quickly and no data was lost. But if these kinds of issues are not detected properly the data can be compromised. In this example we have been talking about a physical problem, but there are some other cases where it is more difficult to detect the problem, especially when it is a logical corruption.

How to avoid it

Using Oracle ASM: Oracle recommends using ASM as the storage for the database. ASM has three types of redundancy: External, Normal and High. If we are using Normal or High Oracle keeps a copy (Normal) or two copies (High) of every block. This block is called “Mirror Block” and whenever it finds a corrupt block, it automatically restores the corrupt block from one of its mirror copies. I have written an article where I explain with a lot of details how Oracle recover a block from its mirror copy, in case you want you read it: Data block recovering process using Normal Redundancy

Using parameter db_block_checking: This parameter is used to control whether block checking is done for transaction managed blocks. As early detection of corruptions is useful, and has only a small performance impact. However, there are some types of applications where having the parameter DB_BLOCK_CHECKING = TRUE can have a considerable overhead, all depends on the application, to test the change in a test environment is recommended. The immediate overhead is a CPU overhead of checking a block contents after each change but a secondary effect is than that this means blocks are held for longer periods of time so other sessions needing the current block image may have to wait longer. The actual overhead on any system depends heavily on the application profile and data layout.

Using parameter db_block_checksum: determines whether DBWn and the direct loader will calculate a checksum (a number calculated from all the bytes stored in the block) and store it in the cache header of every data block when writing it to disk. Checksums are verified when a block is read – only if this parameter is TYPICAL or FULL and the last write of the block stored a checksum. In FULL mode, Oracle also verifies the checksum before a change like update/delete statements and recomputes it after the change is applied. In addition, Oracle gives every log block a checksum before writing it to the current log. Checksums allow Oracle to detect corruption caused by underlying disks, storage systems, or I/O systems. If set to FULL, DB_BLOCK_CHECKSUM also catches in-memory corruptions and stops them from making it to the disk. Turning on this feature in TYPICAL mode causes only an additional 1% to 2% overhead. In FULL mode it causes 4% to 5% overhead.

Dbfsize: Can be used to check the consistency of Block 0.

Dbverify: Can be used to check Oracle datafiles for signs of corruption and give some degree of confidence that a datafile is free from corruption. It opens files in a read-only mode and so cannot change the contents of the file being checked. It checks that datafile has a valid header. Each data block in the file has a special “wrapper” which identifies the block – this “wrapper” is checked for correctness. Dbverify also checks that DATA (TABLE) and INDEX blocks are internally consistent. And, from 8.1.6 onwards, it checks that various other block types are internally consistent (such as rollback segment blocks).

RMAN VALIDATE command: You can use the VALIDATE command to manually check for physical and logical corruptions in database files. This command performs the same types of checks as BACKUP VALIDATE. By default, RMAN does not check for logical corruption. If you specify CHECK LOGICAL on the BACKUP command, however, then RMAN tests data and index blocks for logical corruption, such as corruption of a row piece or index entry.

RMAN> validate check logical database;

RMAN > validate database;

RMAN > validate backupset 11;

RMAN > validate datafile 2 block 11;

I have written some other articles related to RMAN and corrupt blocks, in case you want to read more about the issue

Conclusion

Perform proactive tasks to detect or avoid having physical and logical corruption, if the corruption is detected on time, the solution can be easily executed. Oracle offers several tools that we can use to detect, monitor, and fix corruption in the block. It is important to be aware of these type of problems so that our data is not compromised.

Start the discussion at forums.toadworld.com