Introduction

This use case article describes the recovery of a production Oracle database without the availability of the required critical archive log files after bulk and unexpected changes to the database had been performed. Unfortunately, there were no flashback technologies enabled to revert the changes and we had to initiate disaster recovery. In this article, we will see how the database can be recovered using a well-known (but undocumented) parameter and what decisions to make when the business is affected, along with a few best practices to avoid such situations.

Series of events

- Received request from a customer to restore their production database with the latest full backup and point-in-time recovery, anytime between 09/30/2017 11:30 PM and 10/01/2017 00:10 AM, because after that time there were bulk changes in the database which caused loss of data.

- In such cases, the first recovery option to try is to perform flashback, but these features were not enabled and hence this method was ruled out.

- There was no standby database with a delay so that we could failover the standby to primary. This database had no standby available for it.

- Incremental SCN could be performed after restoring the old backup as a new auxiliary instance in the development server and again, you would need to perform a refresh from a new database to the production database. [Could be considered as last option]

- Then the final option would be performing a fresh restore of the database from the latest backups.

- We reviewed the backup: Found once-weekly expdp backup and last backup ran on 09/27/2017

- This was not a feasible option because there would be three days of data loss with this backup.

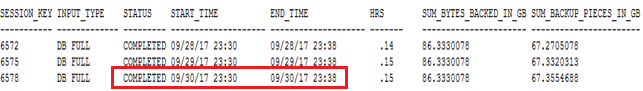

- We reviewed the backup: Found daily full RMAN backup, and the last backup ran on 09/30/2017

- RMAN Backup start time: 09/30/17 23:30

- RMAN Backup end time: 09/30/17 23:38

- Hence, we decided to use the latest backup completed on 09/30/17 (highlighted above)

- Database blackout was performed to avoid monitoring of the target.

- We took a fresh backup using expdp before destroying the database. (We were aware that this database backup was not useful for our solution, however we saved it in case it might be required for future purposes) – This is one notable step every DBA should consider.

- Dropped the database.

- Restored control file from a recent backup.

- Restored database as well.

At this point, we needed to perform the recovery using the archive logs generated during the RMAN backup to synchronize the SCN for all the data files and control files.

- Recovery using archive log files

We started recovery from SQL Plus to start to apply the available archive logs up to the timestamp of 10/01/2017 00:10.

SQL> alter database recover automatic using backup controlfile until cancel;

alter database recover automatic using backup controlfile until cancel

*

Nevertheless, the recover command during the first required sequence itself failed and was unable to identify or open the file. During the physical review of files, there were no archive log sequences available and no backups of archive logs found. Usually, the archive log backups are included along with the database backup, or the archive log backups are scheduled separately as per the requirement or the amount of redo generation.

Now we will walk through the backup strategy for the configured production database.

RMAN> run {execute script

The crontab job script calls the catalog script below.

RMAN> print script MSDB_custom_rman_disk_db_bkp;

printing stored script: MSDB_custom_rman_disk_db_bkp

{sql 'alter system archive log current';

allocate channel d1 type disk format 'G:rman01MSDBdatabaseMSDB_full_%U';

setlimit channel d1 maxopenfiles 1;

backup filesperset=32 full database;

release channel d1;

sql 'alter system archive log current';

}

Initially, the script “MSDB_custom_rman_disk_db_bkp” will perform a full backup and then immediately the deletion of archive logs follows. The deletion script does not keep even a single archive log backup whenever this command runs: “delete noprompt archivelog until time 'sysdate';”.

That means after the full backup all the archives deleted; the output from the backup log file is shown below.

sql statement: alter system archive log current

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=197 device type=DISK

deleted archived log

archived log file name=H:APPORACLEFLASH_RECOVERY_AREAMSDBARCHIVELOG17_09_30O1_MF_1_6283_DX0R9SK9_.ARC RECID=6222 STAMP=956100604

deleted archived log

archived log file name=H:APPORACLEFLASH_RECOVERY_AREAMSDBARCHIVELOG17_09_30O1_MF_1_6284_DX0RTHF0_.ARC RECID=6223 STAMP=956101135

Deleted 2 objects

Recovery Manager complete.

Therefore, there are no archive logs available nor is there any backup configured for archive logs.

Rescue Plan – Recovery Options

Now, what are the options left for us?

- Restore the bad backup, which took before database drop? – No use, as there were already bulk changes done.

- Use expdp of 27th September, with three days of data loss? – Three days of loss was not acceptable.

Critical and unsafe strategy

Apart from the above options, there is one more option left that is considered as a last resort, and there is no guarantee that the database can be recovered or usable. This procedure is widely used in scenarios like the one I have described. Personally, from the first time I tested this undocumented technique, back in the year 2011, to now, so far based on my experience I have been able to open the database in a functioning mode is 40 – 50% of the time. An undocumented parameter plays a key role in performing fake recovery with undocumented procedures.

Undocumented parameter: “_ALLOW_RESETLOGS_CORRUPTION = TRUE”, which we have to edit in pfile or update in spfile before opening the database. The actual and complete steps are as follows.

- Add the parameter “_ALLOW_RESETLOGS_CORRUPTION = TRUE” in pfile.

- SQL> startup mount

- SQL> alter database open resetlogs;

- Unset parameter “_ALLOW_RESETLOGS_CORRUPTION”

- Bounce the database

- Export and reimport into a fresh database

Disadvantages

- If the database is unable to open with reset logs then the database will not be usable even for recovery and we have to perform a fresh full restore.

- Example: Error 600 happened during database open, shutting down the database

- Even though the database opened, we may experience many errors related to accessing SQL or PL/SQL objects and there is an official bug for this.

- “Bug 3517065: ORA-600 [4146] AFTER OPENING DATABASE WITH ALLOW_RESETLOGS_CORRUPTION”

Final Call – What is next?

Now coming to the point, Is the undocumented procedure a good practice in critical production databases?

Or…

Are there any possibilities with the available backups? This entire restore and recover task became overhead with small mistakes with the configuration, which made things miserable. After all, I had made the decision to use this procedure, as there was no other option left. I was also aware that this procedure might work or fail with internal errors. However, I made my final call based on the SLA – Recovery Time Objective (RTO) and the criticality of the database.

1. Edited the PFILE and started the instance

Starting up:

Oracle Database 11g Release 11.2.0.3.0 - 64bit Production.

Windows NT Version V6.1 Service Pack 1

CPU : 4 - type 8664, 4 Physical Cores

Process Affinity : 0x0x0000000000000000

Memory (Avail/Total): Ph:5573M/8191M, Ph+PgF:9830M/16381M

VM name : VMWare Version (6)

Using parameter settings in server-side pfile H:APPORACLEPRODUCT.2.0DBHOME_2DATABASEINITMSDB.ORA

System parameters with non-default values:

processes = 150

event = ""

streams_pool_size = 64M

memory_target = 6000M

control_files = "H:APPORACLEORADATAMSDBCONTROL01.CTL"

control_files = "H:APPORACLEFLASH_RECOVERY_AREAMSDBCONTROL02.CTL"

db_block_size = 8192

compatible = "11.2.0.0.0"

cluster_database = FALSE

db_recovery_file_dest = "H:apporacleflash_recovery_area"

db_recovery_file_dest_size= 84G

_allow_resetlogs_corruption= TRUE

undo_tablespace = "UNDOTBS1"

undo_retention = 3000

remote_login_passwordfile= "EXCLUSIVE"

audit_sys_operations = TRUE

db_domain = ""

smtp_out_server = "SMTP.oracle-ckpt.com"

audit_file_dest = "H:APPORACLEADMINMSDBADUMP"

audit_trail = "DB"

audit_trail = "EXTENDED"

db_name = "MSDB"

open_cursors = 300

2. Database opened with Restlogs

SQL> startup mount

SQL> alter database open resetlogs;

Mon Oct 02 13:32:06 2017

ALTER DATABASE MOUNT

Successful mount of redo thread 1, with mount id 950819542

Database mounted in Exclusive Mode

Lost write protection disabled

Completed: ALTER DATABASE MOUNT

Mon Oct 02 13:32:20 2017

alter database open resetlogs

RESETLOGS is being done without consistancy checks. This may result

in a corrupted database. The database should be recreated.

RESETLOGS after incomplete recovery UNTIL CHANGE 2588214675543

SQL> select name,open_mode,resetlogs_change#,resetlogs_time from v$database;

NAME OPEN_MODE RESETLOGS_CHANGE# RESETLOGS_TIME

--------- -------------------- ----------------- --------------------

MSDB READ WRITE 2588214675544 02-OCT-2017 13:32:20

RMAN> list incarnation;

using target database control file instead of recovery catalog

List of Database Incarnations

DB Key Inc Key DB Name DB ID STATUS Reset SCN Reset Time

------- ------- -------- ----------- --- ---------- ----------

1 1 MSDB 777731056 PARENT 1 29-AUG-11

2 2 MSDB 777731056 PARENT 539256 14-MAY-12

3 3 MSDB 777731056 CURRENT 2588214675544 02-OCT-17

After the database opened using the fake recovery, it is necessary to recreate the database by exporting full back and re-importing.

Corrective action plan (CAP)

1. Correct the RMAN backup configuration including validating the backup.

RMAN> print script MSDB_custom_rman_disk_db_bkp;

printing stored script:

2. Review the RMAN backup configuration regularly at least once in a month

3. Perform Drill tests to restore using RMAN backup

4. Enable Flashback database with at least few hours of retention, so that performing a full restore task can be escaped.

5. DR instance should be available all time for the critical database so that with the previous backup and then with incremental roll forward this task can be achieved quickly.

Conclusion

In this practice, we had to make bold decisions because of minor mistakes in not setting up the RMAN backup configuration properly. Hence, it is a best practice to review backup configurations at least once monthly and highly recommended to perform DR test with the backups on different servers, to ensure the production database is safe in all circumstances and easily RTO achievable.

Start the discussion at forums.toadworld.com