Overview

In my last article, Oracle Multi-tenant Application Containers Part-III, we learnt about data sharing among containers in an application container by means of metadata-linked objects, data-linked objects, and extended data-linked objects. We already know that in an application container, the data in metadata-linked objects is specific to various application PDBs (pluggable databases) within the container. In order to view data in a metadata-linked table across various application PDBs, we can either connect to each PDB individually or create corresponding database links.

When it is required to aggregate data across multiple PDBs from a metadata-linked table that exists in the CDB root and multiple PDBs, the CONTAINERS clause introduced in Oracle database 12.1.0.2 comes in handy. When such a table is queried in the CDB root using the CONTAINERS clause, the table is accessed in the CDB root and in each of the opened PDBs, and a UNION ALL of the rows from the table is returned.

In Oracle Database 12.2.0.1, this concept has been extended to work in an application container. When a metadata-linked table is queried using the CONTAINERS clause in the application root, a UNION ALL of the table rows from the application root and all the opened application PDBs is returned. Thus, by leveraging the CONTAINERS clause, the user-created data can be aggregated across many application PDBs from one single place; i.e., the application root. If we need to retrieve data from a subset of the PDBs, we can include a filter on CON_ID or CON$NAME in the WHERE clause.

However, using the CONTAINERS clause like this has a drawback. When rows of tables are horizontally partitioned across PDBs based on a user-defined column (say region), queries requiring access to specific partition(s) of data need to access all the partitions even though rows are retrieved from a subset of partitions only.

This drawback can be overcome by using the CONTAINER Map, which is a single-column map table partitioned by list, hash, or range, created in the application root. It defines the column based on which data is partitioned across application PDBs. It indicates how rows in metadata-linked tables are partitioned across application PDBs. When a query using a CONTAINERS clause is received at the application root, the database server, based on the partitioning key passed to the query, uses the container map to route the query to the relevant application PDBs. This effectively causes the pruning away of other partitions, and therefore the application PDBs, thereby improving the performance of the query.

We can further set the CONTAINERS_DEFAULT attribute on any metadata-linked table so that queries issued in the application root use the CONTAINERS() clause by default for the database object.

In this article, I will demonstrate the:

- Use of the Containers clause in a query issued in the application root to aggregate data in a metadata-linked table across all/a subset of application PDBs

- Use of CONTAINER MAP with a query containing a containers clause

- To specify a filter on the user-defined column (region) based on which data is partitioned.

- To prune away irrelevant partitions when rows are retrieved from a subset of partitions only

- Enabling of the CONTAINERS_DEFAULT attribute for a table so that queries issued against it in the application root use the CONTAINERS() clause by default.

Current Scenario

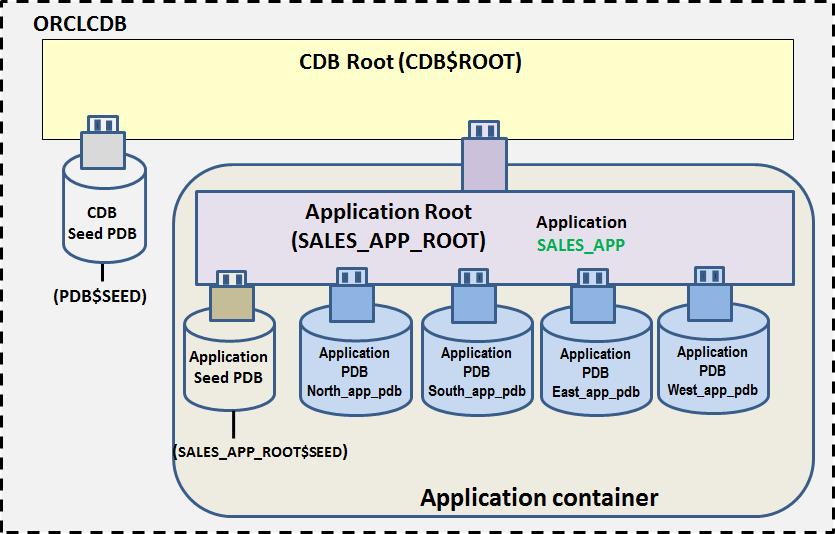

Here, we have an Oracle database 12.2.0.1 CDB called orclcdb as shown. Within this CDB, we have created an application container sales_app for the sales application of an organization. Also we have four application PDBs North_app_pdb, South_app_pdb, East_app_pdb and West_app_pdb, which are databases supporting the sales_app application for the various regional offices of the organization. While sharing the structure of the metadata-linked customers table stored in the application root sales_app_root, each application PDB can store region-specific customer data in the table.

Demonstration

Let us first connect to the CDB orclcdb and verify that there is one application container root sales_app_root in this CDB.

SQL> conn sys/oracle@orclcdb as sysdba

set sqlprompt CDB$ROOT>

sho con_name

CON_NAME

------------------------------

CDB$ROOT

CDB$ROOT>SELECT con_id, name, open_mode, application_root app_root,

application_pdb app_pdb, application_seed app_seed

from v$containers

where application_root = 'YES' and application_pdb = 'NO';

CON_ID NAME OPEN_MODE APP_ROOT APP_PDB APP_SEED

---------- --------------- ---------- -------- -------- --------

3 SALES_APP_ROOT READ WRITE YES NO NO

Connect to application root sales_app_rootand note that there are four application PDBs (north_app_pdb, east_app_pdb, west_app_pdb, south_app_pdb) and one seed PDB (sales_app_root$seed) associated with it.

SQL> conn sys/oracle@host01:1522/sales_app_root as sysdba

set sqlprompt SALES_APP_ROOT>

SALES_APP_ROOT>sho con_name

CON_NAME

------------------------------

SALES_APP_ROOT

SALES_APP_ROOT>@get_app_containers

CON_ID NAME OPEN_MODE APP_ROOT APP_PDB APP_SEED

---------- -------------------- ---------- -------- -------- --------

3 SALES_APP_ROOT READ WRITE YES NO NO

4 NORTH_APP_PDB READ WRITE NO YES NO

5 SOUTH_APP_PDB READ WRITE NO YES NO

6 WEST_APP_PDB READ WRITE NO YES NO

8 EAST_APP_PDB READ WRITE NO YES NO

9 SALES_APP_ROOT$SEED READ WRITE NO YES YES

6 rows selected.

Besides an implicit application, another application sales_app is currently installed in this container. All the application PDBs and the seed PDB are synced with application sales_app version 1.0.

SALES_APP_ROOT>@get_app_status

APP_NAME APP_VERSION APP_ID APP_STATUS IMPLICIT

------------------------------------- ------------ ------ ---------- --------

APP$5313F8AEAFA337B0E05364C909C08D65 1.0 2 NORMAL Y

SALES_APP 1.0 3 NORMAL N

SALES_APP_ROOT>@get_sales_app_pdb_status

NAME APP_NAME APP_VERSION APP_STATUS

-------------------- -------------------- ----------- ------------

NORTH_APP_PDB SALES_APP 1.0 NORMAL

WEST_APP_PDB SALES_APP 1.0 NORMAL

SALES_APP_ROOT$SEED SALES_APP 1.0 NORMAL

EAST_APP_PDB SALES_APP 1.0 NORMAL

SOUTH_APP_PDB SALES_APP 1.0 NORMAL

Let us check the common objects in application sales_app. Presently, there is a metadata-linked common application table sales_app_user.customerswith the structure as shown.

SALES_APP_ROOT>@get_sales_app_objects

APP_NAME OWNER OBJECT_NAME OBJECT_TYPE SHARING APPLICATION

---------- --------------- ------------ ----------- ------------ ------------

SALES_APP SALES_APP_USER CUSTOMERS TABLE METADATA LINK Y

SALES_APP_ROOT>desc sales_app_user.customers

Name Name Null? Type

----------------------- -------- ----------------

CUST_ID VARCHAR2(10)

CUST_NAME VARCHAR2(30)

CUST_ADD VARCHAR2(30)

CUST_ZONE CHAR(1)

Let us connect to each of the application PDBs to view the records in the table sales_app_user.customers. Note that the customer data is partitioned across the application PDBs based on the column CUST_ZONE. Currently, we have four records in the table in each of the application PDBs, as shown.

EAST_APP_PDB>select * from sales_app_user.customers;

CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- ---------------- ---------------------- -----------

E1 East_Cust_1 East_Cust_1_address E

E2 East_Cust_2 East_Cust_2_address E

E3 East_Cust_3 East_Cust_3_address E

E4 East_Cust_4 East_Cust_4_address E

WEST_APP_PDB>select * from sales_app_user.customers;

CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- --------------- ---------------------- ---------

W1 West_Cust_1 West_Cust_1_address W

W2 West_Cust_2 West_Cust_2_address W

W3 West_Cust_3 West_Cust_3_address W

W4 West_Cust_4 West_Cust_4_address W

NORTH_APP_PDB>select * from sales_app_user.customers;

CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- ----------------- ----------------------- ---------

N1 North_Cust_1 North_Cust_1_address N

N2 North_Cust_2 North_Cust_2_address N

N3 North_Cust_3 North_Cust_3_address N

N4 North_Cust_4 North_Cust_4_address N

SOUTH_APP_PDB>select * from sales_app_user.customers;

CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- ---------------- ------------------------ ---------

S1 South_Cust_1 South_Cust_1_address S

S2 South_Cust_2 South_Cust_2_address S

S3 South_Cust_3 South_Cust_3_address S

S4 South_Cust_4 South_Cust_4_address S

Instead of connecting to each application PDB to view records, now we will use the CONTAINERS clause to aggregate all rows from the four application PDBs while connected to the application root sales_app_root.

SALES_APP_ROOT>select * from containers(sales_app_user.customers) order by cust_id;

CUST_ID CUST_NAME CUST_ADD CUST_ZONE CON_ID

-------- --------------- ------------------------------ ---------- ----------

E1 East_Cust_1 East_Cust_1_address E 8

E2 East_Cust_2 East_Cust_2_address E 8

E3 East_Cust_3 East_Cust_3_address E 8

E4 East_Cust_4 East_Cust_4_address E 8

N1 North_Cust_1 North_Cust_1_address N 4

N2 North_Cust_2 North_Cust_2_address N 4

N3 North_Cust_3 North_Cust_3_address N 4

N4 North_Cust_4 North_Cust_4_address N 4

S1 South_Cust_1 South_Cust_1_address S 5

S2 South_Cust_2 South_Cust_2_address S 5

S3 South_Cust_3 South_Cust_3_address S 5

S4 South_Cust_4 South_Cust_4_address S 5

W1 West_Cust_1 West_Cust_1_address W 6

W2 West_Cust_2 West_Cust_2_address W 6

W3 West_Cust_3 West_Cust_3_address W 6

W4 West_Cust_4 West_Cust_4_address W 6

16 rows selected.

We can also specify a filter on the column CON_ID to get data from a subset of application PDBs.

SALES_APP_ROOT>select * from containers(sales_app_user.customers)

where con_id in (5,6);

CUST_ID CUST_NAME CUST_ADD CUST_ZONE CON_ID

-------- --------------- ------------------------------ ---------- ----------

S1 South_Cust_1 South_Cust_1_address S 5

S2 South_Cust_2 South_Cust_2_address S 5

S3 South_Cust_3 South_Cust_3_address S 5

S4 South_Cust_4 South_Cust_4_address S 5

W1 West_Cust_1 West_Cust_1_address W 6

W2 West_Cust_2 West_Cust_2_address W 6

W3 West_Cust_3 West_Cust_3_address W 6

W4 West_Cust_4 West_Cust_4_address W 6

8 rows selected.

However, if it is desired to fetch data from partitions corresponding to specific values in the partitioning column, it is advisable to specify a filter on the partitioning column; e.g., where CUST_ZONE in (‘S’, ‘W’) rather than CON_ID because a change in CON_ID may require application changes.

In Oracle Database 12.2, CONTAINERS() adds two more implicit columns, CON$NAME and CDB$NAME.

These are hidden columns and thus have to be explicitly referenced if their values are to be displayed. Consequently, we can query data from an application PDB by specifying its name also.

SALES_APP_ROOT>select CDB$NAME, CON_ID, CON$NAME, CUST_ID, CUST_NAME,

CUST_ADD,CUST_ZONE ZONE

from containers(sales_app_user.customers)

where con$name = 'SOUTH_APP_PDB';

CDB$NAME CON_ID CON$NAME CUST_ID CUST_NAME CUST_ADD ZONE

-------- ------- ------------- ------- ------------ -------------------- ----

orclcdb 5 SOUTH_APP_PDB S1 South_Cust_1 South_Cust_1_address S

orclcdb 5 SOUTH_APP_PDB S2 South_Cust_2 South_Cust_2_address S

orclcdb 5 SOUTH_APP_PDB S3 South_Cust_3 South_Cust_3_address S

orclcdb 5 SOUTH_APP_PDB S4 South_Cust_4 South_Cust_4_address S

Let us retrieve customer records for the South zone only and check the corresponding execution plan.

SALES_APP_ROOT>select CON_ID, CON$NAME, CUST_ID, CUST_NAME,

CUST_ADD,CUST_ZONE ZONE

from containers(sales_app_user.customers)

where cust_zone = 'S';

CON_ID CON$NAME CUST_ID CUST_NAME CUST_ADD ZONE

------- ------------- ------- ------------ -------------------- ----

5 SOUTH_APP_PDB S1 South_Cust_1 South_Cust_1_address S

5 SOUTH_APP_PDB S2 South_Cust_2 South_Cust_2_address S

5 SOUTH_APP_PDB S3 South_Cust_3 South_Cust_3_address S

5 SOUTH_APP_PDB S4 South_Cust_4 South_Cust_4_address S

SALES_APP_ROOT>select * from table(dbms_xplan.display_cursor);

PLAN_TABLE_OUTPUT

------------------------------------------------------------------------------

SQL_ID 675zb5bfqqtrn, child number 0

-------------------------------------

select CON_ID, CON$NAME, CUST_ID, CUST_NAME,

CUST_ADD,CUST_ZONE ZONE from

containers(sales_app_user.customers) where cust_zone = 'S'

Plan hash value: 1360703638

------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | Pstart| Pstop |

------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 4 (100)| | | |

| 1 | PARTITION LIST ALL| | 1100 | 132K| 4 (100)| 00:00:01 | 1 | 6 |

|* 2 | CONTAINERS FULL | CUSTOMERS | 1100 | 132K| 4 (100)| 00:00:01 | | |

------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - filter("CUST_ZONE"='S')

21 rows selected.

As can be seen, although the rows of the customers table are horizontally partitioned across PDBs based on cust_zonecolumn and only one PDB (south_app_pdb) needs to be accessed to retrieve the desired rows, all the partitions are being accessed. To overcome this drawback, we can create a container map table which will inform the database server about the partitioning strategy so that a query would be appropriately routed to the relevant application PDB(s).

The container map table is a single-column table created in the application root. The column name should match the column being used for partitioning the data in the metadata-linked table being queried. The map table is partitioned by list, hash, or range on its only column. The names of the partitions of the map table should match the names of the corresponding application PDBs in the container.

Here, we will create a list-partitioned map table named sales_app_user.map_table that creates a partition for each cust_zone, and zone code (‘E’, ‘W’, ‘N’, ‘S’) is used to determine the zone. The partitions are named east_app_pdb, west_app_pdb, north_app_pdb and south_app_pdb corresponding to the names of the application PDBs.

SALES_APP_ROOT>CREATE TABLE sales_app_user.map_table

(cust_zone char(1))

PARTITION BY LIST (cust_zone)

( PARTITION east_app_pdb VALUES ('E'),

PARTITION west_app_pdb VALUES ('W'),

PARTITION north_app_pdb VALUES ('N'),

PARTITION south_app_pdb VALUES ('S'));

Table created.

Next, while connected to the application root, we will set the database property container_map for the application container to the name of map table.

SALES_APP_ROOT>ALTER DATABASE SET container_map='sales_app_user.map_table';

Database altered.

SALES_APP_ROOT>select * from database_properties

where property_name = 'CONTAINER_MAP';

PROPERTY_NAME PROPERTY_VALUE DESCRIPTION

--------------- ----------------------------------- --------------------------

CONTAINER_MAP SALES_APP_USER.MAP_TABLE value of container mapping

SALES_APP_ROOT> SELECT container_map, container_map_object , table_name

FROM dba_tables

WHERE container_map_object = 'YES';

CONTAINER_MAP CONTAINER_MAP_OBJECT TABLE_NAME

--------------- ------------------------- ----------

NO YES MAP_TABLE

Although we have set the map table for the container, the queries against the table sales_app_user.customerscannot use the container map as it has not been enabled to collaborate with the container map table (container_map = 'NO')

SALES_APP_ROOT>SELECT container_map, container_map_object, table_name

FROM dba_tables

WHERE owner='SALES_APP_USER' and table_name = ‘CUSTOMERS’;

CONTAINER_MAP CONTAINER_MAP_OBJECT TABLE_NAME

--------------- ------------------------- ----------

NO NO CUSTOMERS

Let us enable the sales_app_user.customers table to collaborate with the container map table as part of application upgrade. This results in setting of the CONTAINER_MAP attribute on the table.

SALES_APP_ROOT>ALTER PLUGGABLE DATABASE APPLICATION sales_app

begin UPGRADE '3.0' TO '3.1';

ALTER TABLE sales_app_user.customers ENABLE container_map;

ALTER PLUGGABLE DATABASE APPLICATION sales_app

end UPGRADE TO '3.1';

SALES_APP_ROOT>SELECT containers_default, container_map,

container_map_object, table_name

FROM dba_tables

WHERE owner='SALES_APP_USER'

and table_name = 'CUSTOMERS';

CONTAINERS_DEFAULT CONTAINER_MAP CONTAINER_MAP_OBJECT TABLE_NAME

-------------------- --------------- ------------------------- ----------

NO YES NO CUSTOMERS

Synchronize all the application PDBs with the upgraded application.

EAST_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

WEST_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

NORTH_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

SOUTH_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

SALES_APP_ROOT>@get_sales_app_pdb_status

NAME APP_NAME APP_VERSION APP_STATUS

-------------------- -------------------- ----------- ------------

EAST_APP_PDB SALES_APP 3.1 NORMAL

WEST_APP_PDB SALES_APP 3.1 NORMAL

SALES_APP_ROOT$SEED SALES_APP 2.0 NORMAL

NORTH_APP_PDB SALES_APP 3.1 NORMAL

SOUTH_APP_PDB SALES_APP 3.1 NORMAL

Now, if we issue a containers query to retrieve customer records for a subset of zones and check the corresponding execution plan, it can be seen that the query is routed to only those PDB(s) which contain(s) the required data.

----

PARTITION LIST SINGLE

----

SALES_APP_ROOT>select CON_ID, con$name, CUST_ID, CUST_NAME, CUST_ADD,CUST_ZONE

from containers(sales_app_user.customers)

where cust_zone = 'E';

CON_ID CON$NAME CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- --------------- -------- ----------- ------------------- -----------

8 EAST_APP_PDB E1 East_Cust_1 East_Cust_1_address E

8 EAST_APP_PDB E2 East_Cust_2 East_Cust_2_address E

8 EAST_APP_PDB E3 East_Cust_3 East_Cust_3_address E

8 EAST_APP_PDB E4 East_Cust_4 East_Cust_4_address E

SALES_APP_ROOT>select * from table(dbms_xplan.display_cursor);

PLAN_TABLE_OUTPUT

------------------------------------------------------------------------------

SQL_ID 089fr84hdnbzz, child number 0

-------------------------------------

select CON_ID, con$name, CUST_ID, CUST_NAME, CUST_ADD,

CUST_ZONE from containers(sales_app_user.customers)

where cust_zone = 'E'

Plan hash value: 4235726083

------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | Pstart| Pstop |

------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 4 (100)| | | |

| 1 | PARTITION LIST SINGLE| | 1000 | 120K| 4 (100)| 00:00:01 | KEY | KEY |

| 2 | CONTAINERS FULL | CUSTOMERS | 1000 | 120K| 4 (100)| 00:00:01 | | |

------------------------------------------------------------------------------

16 rows selected.

----

PARTITION LIST INLIST

----

SALES_APP_ROOT>select CON_ID, con$name, CUST_ID, CUST_NAME, CUST_ADD,CUST_ZONE

from containers(sales_app_user.customers)

where cust_zone in ('E','W');

CON_ID CON$NAME CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- ------------ -------- ----------- ------------------- ----------

8 EAST_APP_PDB E1 East_Cust_1 East_Cust_1_address E

8 EAST_APP_PDB E2 East_Cust_2 East_Cust_2_address E

8 EAST_APP_PDB E3 East_Cust_3 East_Cust_3_address E

8 EAST_APP_PDB E4 East_Cust_4 East_Cust_4_address E

6 WEST_APP_PDB W1 West_Cust_1 West_Cust_1_address W

6 WEST_APP_PDB W2 West_Cust_2 West_Cust_2_address W

6 WEST_APP_PDB W3 West_Cust_3 West_Cust_3_address W

6 WEST_APP_PDB W4 West_Cust_4 West_Cust_4_address W

8 rows selected.

SALES_APP_ROOT>select * from table (dbms_xplan.display_cursor);

PLAN_TABLE_OUTPUT

------------------------------------------------------------------------------

SQL_ID 5004rv204w2vq, child number 0

-------------------------------------

select CON_ID, con$name, CUST_ID, CUST_NAME, CUST_ADD,

CUST_ZONE from containers(sales_app_user.customers)

where cust_zone in ('E','W')

Plan hash value: 2821151291

------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | Pstart| Pstop |

------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 4 (100)| | | |

| 1 | PARTITION LIST INLIST| | 1000 | 120K| 4 (100)| 00:00:01 |KEY(I) |KEY(I) |

| 2 | CONTAINERS FULL | CUSTOMERS | 1000 | 120K| 4 (100)| 00:00:01 | | |

------------------------------------------------------------------------------

16 rows selected.

--------------------

PARTITION LIST ALL

--------------------

SALES_APP_ROOT>select CON_ID, con$name, CUST_ID, CUST_NAME, CUST_ADD,CUST_ZONE

from containers(sales_app_user.customers);

CON_ID CON$NAME CUST_ID CUST_NAME CUST_ADD CUST_ZONE

---------- ------------- -------- ------------- -------------------- ----------

8 EAST_APP_PDB E1 East_Cust_1 East_Cust_1_address E

8 EAST_APP_PDB E2 East_Cust_2 East_Cust_2_address E

8 EAST_APP_PDB E3 East_Cust_3 East_Cust_3_address E

8 EAST_APP_PDB E4 East_Cust_4 East_Cust_4_address E

6 WEST_APP_PDB W1 West_Cust_1 West_Cust_1_address W

6 WEST_APP_PDB W2 West_Cust_2 West_Cust_2_address W

6 WEST_APP_PDB W3 West_Cust_3 West_Cust_3_address W

6 WEST_APP_PDB W4 West_Cust_4 West_Cust_4_address W

4 NORTH_APP_PDB N1 North_Cust_1 North_Cust_1_address N

4 NORTH_APP_PDB N2 North_Cust_2 North_Cust_2_address N

4 NORTH_APP_PDB N3 North_Cust_3 North_Cust_3_address N

4 NORTH_APP_PDB N4 North_Cust_4 North_Cust_4_address N

5 SOUTH_APP_PDB S1 South_Cust_1 South_Cust_1_address S

5 SOUTH_APP_PDB S2 South_Cust_2 South_Cust_2_address S

5 SOUTH_APP_PDB S3 South_Cust_3 South_Cust_3_address S

5 SOUTH_APP_PDB S4 South_Cust_4 South_Cust_4_address S

16 rows selected.

SALES_APP_ROOT>select * from table (dbms_xplan.display_cursor);

PLAN_TABLE_OUTPUT

------------------------------------------------------------------------------SQL_ID dvwusujgvrbx4, child number 0

-------------------------------------

select CON_ID, con$name, CUST_ID, CUST_NAME, CUST_ADD,

CUST_ZONE from containers(sales_app_user.customers)

Plan hash value: 1360703638

------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | Pstart| Pstop |

------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 3 (100)| | | |

| 1 | PARTITION LIST ALL| | 100K| 11M| 3 (100)| 00:00:01 | 1 | 4 |

| 2 | CONTAINERS FULL | CUSTOMERS | 100K| 11M| 3 (100)| 00:00:01 | | |

------------------------------------------------------------------------------

It is worth mentioning here that when the container map is in use, the rows in a metadata-linked table inserted in the application root, if any, are not displayed.

We can further upgrade our application to enable the table sales_app_user.customers to be used without the CONTAINERS() clause so that a query against the table will use the CONTAINERS() clause by default even when the CONTAINERS clause is not explicitly specified. This will result in setting the CONTAINERS_DEFAULT attribute on the table.

SALES_APP_ROOT>ALTER PLUGGABLE DATABASE APPLICATION sales_app

begin UPGRADE '3.1' TO '3.2';

ALTER TABLE sales_app_user.customers ENABLE containers_default;

ALTER PLUGGABLE DATABASE APPLICATION sales_app

end UPGRADE TO '3.2';

SALES_APP_ROOT>SELECT containers_default, container_map, container_map_object,

table_name

FROM dba_tables

WHERE owner='SALES_APP_USER' and table_name = 'CUSTOMERS';

CONTAINERS_DEFAULT CONTAINER_MAP CONTAINER_MAP_OBJECT TABLE_NAME

-------------------- --------------- ------------------------- ----------

YES YES NO CUSTOMERS

Let us synchronize all the application PDBs with the upgraded application.

EAST_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

WEST_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

NORTH_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

SOUTH_APP_PDB>alter pluggable database application sales_app sync;

Pluggable database altered.

SALES_APP_ROOT>@get_sales_app_pdb_status

NAME APP_NAME APP_VERSION APP_STATUS

-------------------- -------------------- ----------- ------------

WEST_APP_PDB SALES_APP 3.2 NORMAL

NORTH_APP_PDB SALES_APP 3.2 NORMAL

SALES_APP_ROOT$SEED SALES_APP 2.0 NORMAL

SOUTH_APP_PDB SALES_APP 3.2 NORMAL

EAST_APP_PDB SALES_APP 3.2 NORMAL

15 rows selected.

Now, if we issue a query against the sales_app_user.customerstable without a containers clause, CONTAINER_MAP and CONTAINERS_DEFAULT are used together. Whereas CONTAINER_DEFAULT causes the query to be automatically transformed into a containers query, CONTAINER_MAP causes the pruning of partitions and therefore the application PDBs, based on the key that is passed to the query. Note that it is not mandatory to use CONTAINERS_DEFAULT with CONTAINER_MAP.

SALES_APP_ROOT>select * from sales_app_user.customers where cust_zone = 'E';

CUST_ID CUST_NAME CUST_ADD CUST_ZONE CON_ID

-------- --------------- ------------------------------ ---------- ----------

E1 East_Cust_1 East_Cust_1_address E 8

E2 East_Cust_2 East_Cust_2_address E 8

E3 East_Cust_3 East_Cust_3_address E 8

E4 East_Cust_4 East_Cust_4_address E 8

SALES_APP_ROOT>select * from table (dbms_xplan.display_cursor);

PLAN_TABLE_OUTPUT

------------------------------------------------------------------------------

SQL_ID 0jrv800mb5chy, child number 0

-------------------------------------

select * from sales_app_user.customers where cust_zone = 'E'

Plan hash value: 4235726083

------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | Pstart| Pstop |

------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 4 (100)| | | |

| 1 | PARTITION LIST SINGLE| | 1100 | 62700 | 4 (100)| 00:00:01 | KEY | KEY |

| 2 | CONTAINERS FULL | CUSTOMERS | 1100 | 62700 | 4 (100)| 00:00:01 | | |

------------------------------------------------------------------------------

14 rows selected.

Note:

- Container maps can be created in the CDB root, but the best practice is to create them in application roots.

- One application container can have one container map only.

- Data loaded into the PDBs’ tables must be consistent with the partitions defined in the map object.

- When an application PDB that is referenced in a map object is unplugged, renamed, or dropped, the map object must be updated manually to account for such changes.

Summary

- By leveraging the CONTAINERS clause, user-created data across many application PDBs can be aggregated from one single place; i.e., the application root.

- When the CONTAINERS clause is used without a container map, queries requiring access to specific partitions of data need to access all the partitions even though rows are retrieved from a subset of partitions only.

- Use of CONTAINER MAP with a query containing a containers clause causes the pruning away of irrelevant partitions when rows are retrieved from a subset of partitions only.

- Enabling of the CONTAINERS_DEFAULT attribute for a table causes queries issued against it in the application root to use the CONTAINERS() clause by default.

References

http://www.oracle.com/technetwork/database/multitenant/overview/multitenant-wp-12c-2078248.pdf

https://docs.oracle.com/database/121/NEWFT/chapter12102.htm#NEWFT507

Scripts used in this article

get_app_containers.sql

— Find out the containers in an application root when executed from application root

SELECT con_id, name, open_mode, application_root app_root,

application_pdb app_pdb, application_seed app_seed

from v$containers

order by con_id;

get_app_status.sql

— Find out the status of various applications in an application container when executed from an application root

select app_name, app_version, app_id, app_status, app_implicit implicit from dba_applications;

get_sales_app_pdb_status

— Find out various versions of application SALES_APP and synchronization status of various application PDBs with it. Execute from application root.

SELECT c.name,

aps.app_name,

aps.app_version,

aps.app_status

FROM dba_app_pdb_status aps

JOIN v$containers c ON c.con_uid = aps.con_uid

WHERE aps.app_name = 'SALES_APP';

get_sales_app_objects

— Find out objects belonging to sales_app application.

select app.app_name, obj.owner, obj.object_name, obj.object_type,

obj.sharing, obj.application

from dba_objects obj, dba_applications app

where obj.owner in

(select username from dba_users

where oracle_maintained = 'N')

and obj.application = 'Y'

and obj.created_appid = app.app_id;

----------------------------------------------------------------------------------------------------------------------------------------

Start the discussion at forums.toadworld.com