Have you have found running a workflow in Oracle Data Miner slow or running the scripts in the database slow ?

No. Good, because I haven't found it slow.

But (there is always a but) it really depends on the volume of data your are dealing with. For the vast majority of us who aren't of the size of google, amazon, etc have data volumes that are not that large really and a basic server can process many millions of records extremely quickly using Oracle Data Mining.

But what if we have a large volume of data. In one recent project I had a data set containing over 3.5 billion records. Now that is big data. All of this data sitting in an Oracle Database.

So how can we process over 3.5 billion records in a couple of seconds, building 4 machine learning models in that time? Is that really possible with just using an Oracle Database? Yes is the answer and very easily. (Surely I needed Hadoop and Spark to process this data? Nope!)

The Oracle Data Miner (ODMr) tool comes with a new feature in SQL Developer 4 (and higer) that allows you to manage using Parallel execution and the in-memory DB features. These can be accessed on the ODMr Worksheet tool bar.

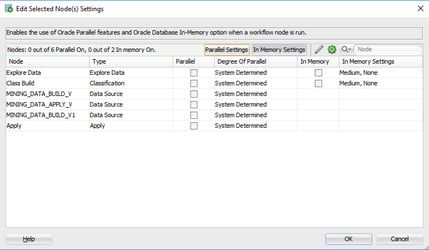

The best time to look at these setting is when you have created your workflow and are ready to run it for the first time. When you click on the 'Performance Options' link, you will get the following window. It will display the list of nodes you have in the workflow and will then indicate if the Degree of Parallel and the In-Memory options can be set for each of the nodes.

The default values are shown and you can changes these. For example, in a lot of scenarios you might prefer to leave the Degree of Parallel as System Determined. This will then use whatever the the default is for the database and controlled by the DBA, but if you want to specify a particular value then you can, for example setting the degree of parallel to 4 for the 'Class Build' node, in the above image. Similarly for the in-memory option, this will only be available for nodes where the in-memory option would be applicable. This will be where there is a lot of data processing (preparing data, transforming data, performing specific statistics, etc) and for storing any data that is generated by Oracle Data Mining.

But what if you want to change the default values. You can change these at a global level within the SQL Developer Preferences. Here you can set the default to be used for each of the different types of Oracle Data Mining nodes.

I mentioned at the start that I've been able to build 4 machine learning models using Oracle Data Mining on a data set of over 3.5 billion records, all in a couple of seconds. In my scenario Parallel was set to 16 and we didn't use in-memory as we didn't have the licence for it. You can see that machine learning at lighting speed (ish) is possible. This timing is only for building the models, which is the step that consumes the most about of resources and time. When it comes to scoring the data, that is lighting fast. In may scenario, scoring over 300,000 was less than a second, and I didn't use parallel or anything else to speed things up. Because we didn't need to.

Go give it a try!

Start the discussion at forums.toadworld.com