- Reduction in time-to-market

- Application updates in real time

- Reliable releases

- Improved product quality

- Improved Agile environment

What is DevOps?

Agile software development is based around adaptive software development, continuous improvement and early delivery. But one consequence of Agile has been an increase in the number of releases, to the point where they can’t always be implemented reliably and quickly.

In response to this situation, the objective of DevOps is to establish organizational collaboration between the various teams involved in software delivery and to automate the process of software delivery so that a new release can be tested, deployed and monitored continuously.

DevOps is derived from combining Development and Operations. It seeks to automate processes and involves collaboration between software developers, operations and quality assurance teams. Using a DevOps approach with Docker can create a continuous software delivery pipeline from GitHub code to application deployment.

How Can DevOps Be Used With Docker?

A Docker container is run from a Docker image, which may be available locally or on a repository such as Docker Hub. Suppose, as a use case, that the MySQL database or some other database makes new releases available frequently with minor bug fixes or patches. How can each new release be made available to end users without a time lag?

Docker images are associated with code repositories, such as a GitHub code repo or AWS CodeCommit. If a developer were to build a Docker image from the GitHub code repo and make it available on Docker Hub, and end users were to deploy that image as Docker containers, the process would involve several individually run phases:

- Build the GitHub code into a Docker image (with the docker build command).

- Test the Docker image (with the docker run command).

- Upload the Docker image to Docker Hub (with the docker push command).

- End user downloads the Docker image (with the docker pull command).

- End user runs a Docker container (with the docker run command).

- End user deploys an application (using AWS Elastic Beanstalk, for example).

- End user monitors the application.
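
The Docker phases in the list above can be sketched as a sequence of Docker CLI invocations. The following is a minimal Python sketch rather than a definitive implementation: the image name `example/mysql-app`, the tag `1.0`, and the function name `pipeline_commands` are hypothetical, and the commands are only assembled, not executed, so the sketch runs without Docker installed.

```python
from typing import List


def pipeline_commands(image: str, tag: str, context: str = ".") -> List[List[str]]:
    """Return the Docker CLI calls for the build, test, push, pull and run phases.

    The image name, tag and build context are illustrative assumptions.
    """
    ref = f"{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, context],  # build the code into an image
        ["docker", "run", "--rm", ref],           # test: run a throwaway container
        ["docker", "push", ref],                  # upload the image to Docker Hub
        ["docker", "pull", ref],                  # end user downloads the image
        ["docker", "run", "-d", ref],             # end user runs a container
    ]


# Print the commands in order instead of executing them.
for argv in pipeline_commands("example/mysql-app", "1.0"):
    print(" ".join(argv))
```

To actually run a phase, each argument list could be passed to `subprocess.run(argv, check=True)`. Doing so by hand for every release is exactly the repetition that a DevOps pipeline is meant to remove.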

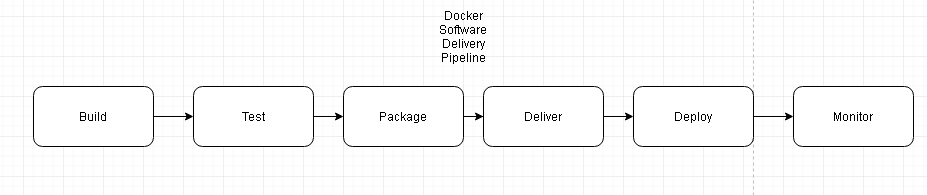

The GitHub to Deployment pipeline is shown in following illustration.

Each time a new MySQL database release with bug fixes becomes available, which could be as often as daily, the complete process would need to be repeated.

But a DevOps approach could be used to make the Docker image pipeline from GitHub to Deployment continuous and not require user or administrator intervention.

Wikipedia defines a software design pattern as “a general reusable solution to a commonly occurring problem.” The DevOps design patterns are centered on continuous code integration, continuous software testing, continuous software delivery, and continuous deployment. Automation, collaboration, and continuous monitoring are some of the other design patterns used in DevOps.

Continuous Code Integration

Continuous code integration is the process of continuously integrating source code into a new build or release. The source code could be in a local repository or on GitHub or AWS CodeCommit.

Continuous Software Testing

Continuous software testing is continuous testing of a new build or release. Tools such as Jenkins provide several features for testing; for instance, user input at each phase of a Jenkins Pipeline. Jenkins provides plugins such as Docker Build Step Plugin for testing each Docker application phase separately: running a Docker container, uploading a Docker image and stopping a Docker container.

Continuous Software Delivery

Continuous software delivery is making new software builds available to end users for deployment in production. For a Docker application, continuous delivery involves making each new version/tag of a Docker image available on a repository such as Docker Hub or the Amazon EC2 Container Registry.

Continuous Software Deployment

Continuous software deployment involves deploying the latest release of a Docker image continuously, such that each time a new version/tag of a Docker image becomes available, it gets deployed in production. Kubernetes, a Docker container manager, already provides features such as rolling updates to update a Docker image to the latest version without interruption of service. With a tool such as Jenkins, rolling updates may be automated, such that each time a new version/tag of a Docker image becomes available, the deployed Docker image is updated.
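
The decision behind an automated rolling update can be sketched in a few lines. This is a minimal Python sketch under stated assumptions: `kubectl set image` is one way to trigger a rolling update of a Kubernetes Deployment, and the deployment name, container name, tags, and the function name `rolling_update_command` are hypothetical. The command is only assembled, not executed.

```python
from typing import List, Optional


def rolling_update_command(deployment: str, container: str, image: str,
                           deployed_tag: str, latest_tag: str) -> Optional[List[str]]:
    """Return a kubectl call that rolls the deployment to latest_tag.

    Returns None when the deployed tag is already the latest one.
    All names and tags are illustrative assumptions.
    """
    if deployed_tag == latest_tag:
        return None  # nothing to do, the deployment is current
    return [
        "kubectl", "set", "image",
        f"deployment/{deployment}",
        f"{container}={image}:{latest_tag}",
    ]


# A newer tag is available, so an update command is produced.
print(rolling_update_command("mysql", "mysql", "mysql", "5.7.15", "5.7.16"))
```

A continuous deployment job would run a check like this whenever a new image tag is published and execute the resulting command only when one is returned.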

Continuous Monitoring

Continuous monitoring is the process of monitoring a running application. Tools such as Sematext can be used to monitor a Docker application. A Sematext Docker Agent can be deployed to monitor Kubernetes cluster metrics and collect logs.

Automation

One kind of automation that applies to Docker applications is automating the installation of tools such as Kubernetes, whose installation is quite involved. Kubernetes 1.4 includes a new tool called “kubeadm” to automate the installation of Kubernetes on Ubuntu and CentOS. The kubeadm tool is not supported on CoreOS.

Collaboration

Collaboration involves cross-team work and sharing of resources. As an example, different development teams could be developing code for different versions (tags) of a Docker image on a GitHub repository and all the Docker image tags would be built and uploaded to Docker Hub simultaneously and continuously. Jenkins provides the Multibranch Pipeline project to build code from multiple branches of a repository such as the GitHub repository.

Another example of collaboration involves Helm charts, which are pre-configured Kubernetes resources that can be used directly. For example, pre-configured Kubernetes resources for the MySQL database are available as Helm charts in the kubernetes/charts and helm/charts repositories. Helm charts eliminate the need to develop complex applications or perform complex upgrades to software that has already been developed.

What are some of the DevOps Tools?

Jenkins

Jenkins is a commonly used automation and continuous delivery tool that can be used to build, test and deliver Docker images continuously. Jenkins provides several plugins that can be used with Docker, including Docker Plugin, Docker Build Step Plugin, and Amazon EC2 Plugin. With Amazon EC2 Plugin, a Cloud configuration can be used to provision Amazon EC2 instances dynamically for Jenkins slave agents. Docker Plugin can be used to configure a cloud to run a Jenkins project in a Docker container. Docker Build Step Plugin is used to test individual phases for a Docker image, such as building the image, running a container, pushing the image to Docker Hub, and stopping and removing a container. Jenkins itself may be run as a Docker container using the Docker image “jenkins”.

AWS CodeCommit and AWS CodeBuild

Amazon AWS provides several tools for DevOps.

AWS CodeCommit is a version control service, similar to GitHub, for storing and managing source code files. AWS CodeBuild is a DevOps tool to build and test code. The code to be built can be integrated continuously from GitHub or CodeCommit, and the output Docker image from CodeBuild can be uploaded to Docker Hub or to the Amazon EC2 Container Registry as a build completes. What CodeBuild provides is an automated and continuous process for the Build, Test, Package and Deliver phases shown in the Pipeline flow diagram in this article. Alternatively, CodeBuild output could be stored in an Amazon S3 bucket.

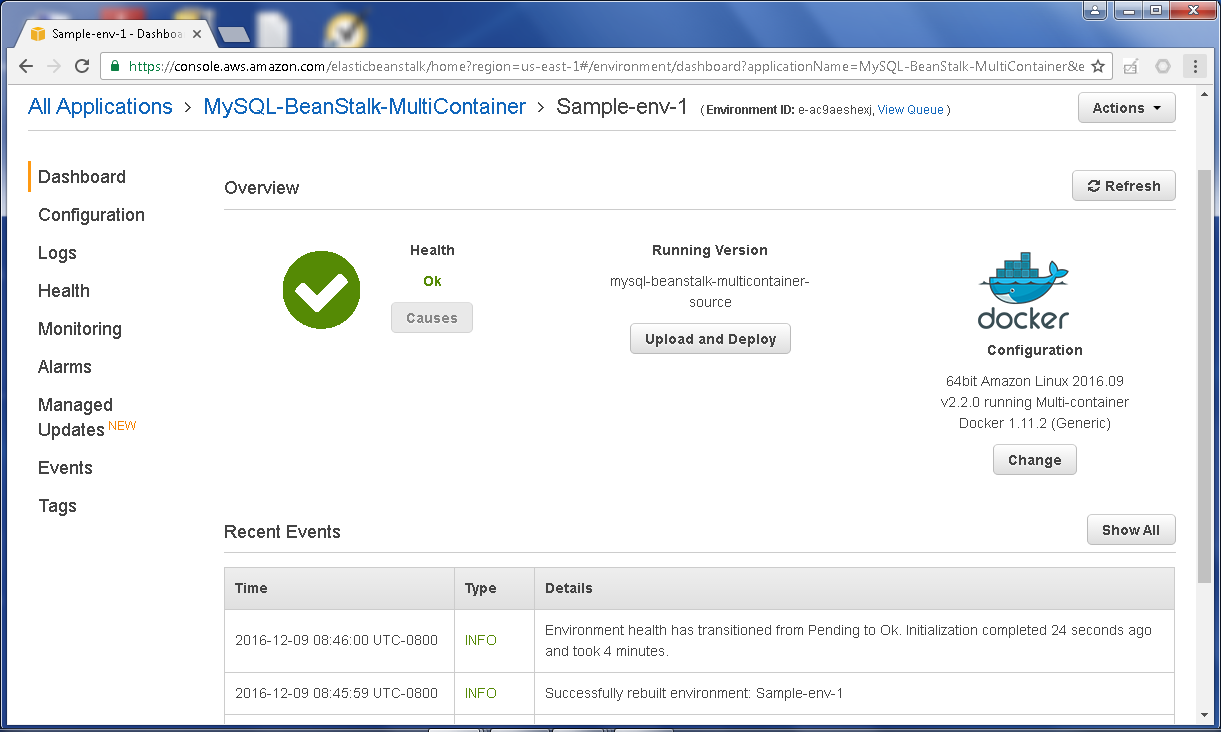

AWS Elastic Beanstalk

AWS Elastic Beanstalk is another AWS DevOps tool, used for deploying and scaling Docker applications on the cloud. Elastic Beanstalk provides automatic capacity provisioning, load balancing, scaling and monitoring for Docker application deployments. A Beanstalk application and environment can be created from a Dockerfile packaged as a zip file that includes the other application resources, or from an unpackaged Dockerfile alone if there are no other resource files. Alternatively, the configuration for a Docker application, including the Docker image and environment variables, could be specified in a Dockerrun.aws.json file. The following example Dockerrun.aws.json file configures two containers, one for the MySQL database and the other for an nginx server:

    {
      "AWSEBDockerrunVersion": 2,
      "volumes": [
        {
          "name": "mysql-app",
          "host": {
            "sourcePath": "/var/app/current/mysql-app"
          }
        },
        {
          "name": "nginx-proxy-conf",
          "host": {
            "sourcePath": "/var/app/current/proxy/conf.d"
          }
        }
      ],
      "containerDefinitions": [
        {
          "name": "mysql-app",
          "image": "mysql",
          "environment": [
            {
              "name": "MYSQL_ROOT_PASSWORD",
              "value": "mysql"
            },
            {
              "name": "MYSQL_ALLOW_EMPTY_PASSWORD",
              "value": "yes"
            },
            {
              "name": "MYSQL_DATABASE",
              "value": "mysqldb"
            },
            {
              "name": "MYSQL_PASSWORD",
              "value": "mysql"
            }
          ],
          "essential": true,
          "memory": 128,
          "mountPoints": [
            {
              "sourceVolume": "mysql-app",
              "containerPath": "/var/mysql",
              "readOnly": true
            }
          ]
        },
        {
          "name": "nginx-proxy",
          "image": "nginx",
          "essential": true,
          "memory": 128,
          "portMappings": [
            {
              "hostPort": 80,
              "containerPort": 80
            }
          ],
          "links": [
            "mysql-app"
          ],
          "mountPoints": [
            {
              "sourceVolume": "mysql-app",
              "containerPath": "/var/mysql",
              "readOnly": true
            },
            {
              "sourceVolume": "nginx-proxy-conf",
              "containerPath": "/etc/nginx/conf.d",
              "readOnly": true
            }
          ]
        }
      ]
    }
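
In a multi-container file like the one above, each mount point refers to a volume by name and each link refers to another container by name, so a mistyped name is an easy mistake to make. The following minimal Python sketch checks those cross-references before deployment. It is an illustration, not part of any AWS tooling: the function name `check_dockerrun` and the shortened `EXAMPLE` configuration are hypothetical, and only the fields used in the listing above are examined.

```python
import json
from typing import Any, Dict, List


def check_dockerrun(config: Dict[str, Any]) -> List[str]:
    """Return a list of naming problems found in a Dockerrun.aws.json structure."""
    problems: List[str] = []
    volumes = {v["name"] for v in config.get("volumes", [])}
    containers = {c["name"] for c in config.get("containerDefinitions", [])}
    for c in config.get("containerDefinitions", []):
        # Every mount point must name a volume declared under "volumes".
        for mp in c.get("mountPoints", []):
            if mp["sourceVolume"] not in volumes:
                problems.append(f"{c['name']}: unknown volume {mp['sourceVolume']}")
        # Every link must name another container definition.
        for link in c.get("links", []):
            if link not in containers:
                problems.append(f"{c['name']}: unknown link {link}")
    return problems


# A shortened version of the configuration, for illustration only.
EXAMPLE = json.loads("""
{
  "AWSEBDockerrunVersion": 2,
  "volumes": [
    {"name": "mysql-app", "host": {"sourcePath": "/var/app/current/mysql-app"}}
  ],
  "containerDefinitions": [
    {"name": "mysql-app", "image": "mysql", "essential": true, "memory": 128,
     "mountPoints": [{"sourceVolume": "mysql-app",
                      "containerPath": "/var/mysql", "readOnly": true}]},
    {"name": "nginx-proxy", "image": "nginx", "essential": true, "memory": 128,
     "links": ["mysql-app"]}
  ]
}
""")

print(check_dockerrun(EXAMPLE))
```

A check of this kind could run as a test phase in the pipeline, so that a broken configuration is caught before it reaches the Beanstalk environment.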

The Beanstalk application deployed can be monitored in a dashboard.

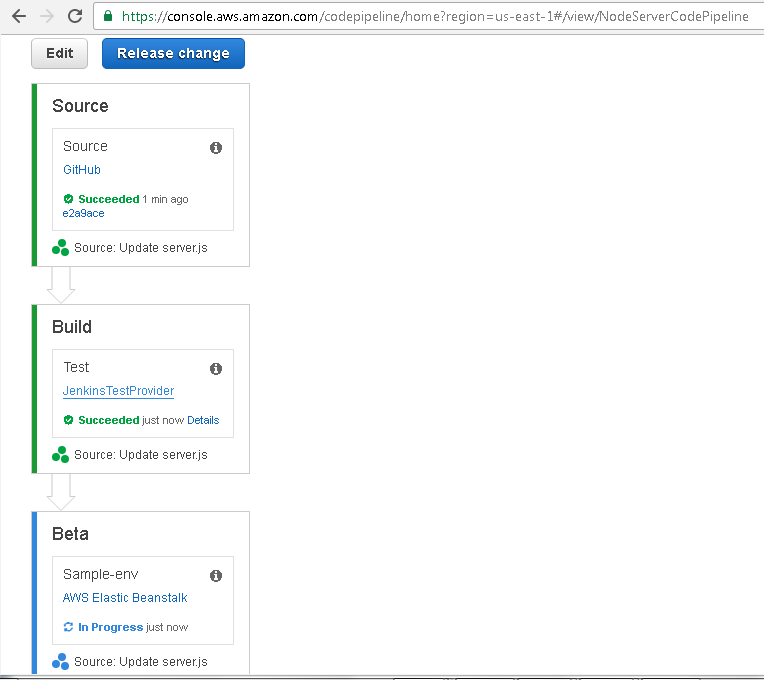

AWS CodePipeline

AWS CodePipeline is a continuous integration and continuous delivery service that can be used to build, test and deploy code every time the code is updated in a GitHub or CodeCommit repository. A CodePipeline can integrate Docker image code from a GitHub (or CodeCommit) repo, build the code into a Docker image and test it using Jenkins, and deploy the Docker image to an AWS Elastic Beanstalk environment, which eliminates the need for user intervention in continuous deployment of a Docker application. Every time code is updated in the source repository, the complete CodePipeline re-runs and the deployment is updated without any interruption in service. CodePipeline provides a graphical user interface to monitor the progress of a pipeline in each of its phases: Source code integration, Build & Test, and Deployment.

In this article we discussed a new trend in Docker software development called DevOps. DevOps takes Agile software development to the next level. The article discussed several new tools and best practices for Docker software use. DevOps is an emerging trend and most suitable for Docker containerized applications.
